Title: The Evolution of Computers: A Comprehensive History

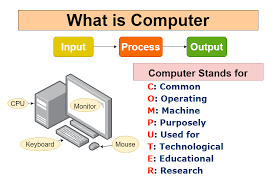

Introduction to Computers:

Computers, the indispensable devices that have transformed every facet of human existence, have a rich and intricate history spanning centuries. From rudimentary mechanical calculators to sophisticated supercomputers, the evolution of computers is a testament to human ingenuity and innovation. This article delves into the fascinating journey of computers, from their humble beginnings to their current omnipresence in modern society.

I. Pre-Modern Era:

The roots of computing can be traced back to ancient civilizations, where people used tools like the abacus for basic arithmetic. The concept of a programmable machine, however, did not take shape until the 19th century.

II. Mechanical Calculators and Analytical Engines:

In the 17th century, Blaise Pascal invented the Pascaline, a mechanical calculator capable of performing addition and subtraction. Later, in the 19th century, Charles Babbage conceptualized the Analytical Engine, a general-purpose mechanical computer designed to be programmed with punched cards. Although it was never built during his lifetime, Babbage’s ideas laid the groundwork for future computing advancements.

III. The Birth of Modern Computing:

The 20th century witnessed the birth of modern computing with the invention of the electronic computer. One of the earliest electronic computers, the ENIAC (Electronic Numerical Integrator and Computer), was developed in the 1940s by John Mauchly and J. Presper Eckert. ENIAC was colossal in size and was built for military purposes: it was designed during World War II to calculate artillery firing tables for the U.S. Army, although it was not completed until 1945, as the war was ending.

IV. Transistors and Integrated Circuits:

The invention of the transistor in the late 1940s revolutionized computing by replacing bulky vacuum tubes with smaller, more efficient components. This led to smaller and faster computers and marked the beginning of the transistor era. The invention of the integrated circuit by Jack Kilby and Robert Noyce in the late 1950s miniaturized electronic components further, paving the way for the microprocessor revolution.

V. The Personal Computer Revolution:

The 1970s witnessed the emergence of the personal computer (PC) era, in which companies like Apple and IBM became leading players. The introduction of the Altair 8800 in 1975, followed by the release of the Apple II in 1977 and the IBM PC in 1981, democratized computing and brought it into homes and offices worldwide.

VI. Advancements in Software and Networking:

Alongside hardware innovations, advancements in software played a pivotal role in shaping the evolution of computers. The development of operating systems such as UNIX, Microsoft Windows, and macOS streamlined user interaction and paved the way for the creation of diverse software applications.

VII. The Internet Age:

The rise of the internet in the late 20th century marked a paradigm shift in computing. Tim Berners-Lee’s creation of the World Wide Web in 1989 made the internet, already a global network of interconnected computers, accessible to a mass audience, revolutionizing communication, commerce, and information access.

VIII. Mobile Computing and Cloud Technology:

The 21st century witnessed the rise of mobile computing with the proliferation of smartphones and tablets. These portable devices, coupled with advancements in cloud technology, enabled users to access vast computing resources and data from anywhere, at any time.

IX. Artificial Intelligence and Quantum Computing:

Recent decades have seen significant advancements in artificial intelligence (AI) and quantum computing. AI technologies such as machine learning and deep learning have empowered computers to perform complex tasks traditionally requiring human intelligence. Similarly, quantum computing, which leverages the principles of quantum mechanics, holds the promise of dramatically faster computation for certain classes of problems and of groundbreaking applications in various fields.

X. Future Prospects:

As we venture further into the 21st century, the future of computing appears boundless. Emerging technologies such as neuromorphic computing, bio-computing, and quantum AI herald a new era of innovation, promising to reshape the very fabric of our existence.

Conclusion:

The evolution of computers from primitive calculating devices to powerful machines has been nothing short of extraordinary. With each technological leap, computers have become more ubiquitous, capable, and integral to our lives. As we continue to push the boundaries of what is possible, the journey of computing remains an ever-evolving saga of human creativity and discovery.

Additional Information About Computers:

I. Types of Computers:

- Personal Computers (PCs): Designed for individual use, these computers come in various forms such as desktops, laptops, and tablets.

- Workstations: High-performance computers optimized for professional use, often employed in fields like engineering, design, and scientific research.

- Servers: Computers dedicated to providing services or resources to other computers, such as hosting websites, storing data, or managing networks.

- Mainframes: Powerful computers capable of handling large-scale processing and data storage, commonly used in enterprise environments for critical applications.

- Supercomputers: The fastest and most powerful computers, utilized for complex simulations, scientific research, and weather forecasting.

II. Components of a Computer:

- Central Processing Unit (CPU): The “brain” of the computer responsible for executing instructions and performing calculations.

- Memory (RAM): Temporary storage used by the CPU to hold data and instructions while processing tasks.

- Storage Devices: Devices like hard disk drives (HDDs) and solid-state drives (SSDs) used for long-term data storage.

- Motherboard: The main circuit board that connects and integrates various components of the computer.

- Input and Output Devices: Devices such as keyboards, mice, monitors, and printers used to interact with the computer and receive or display information.
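How the CPU and memory described above work together can be illustrated with a toy example. The following is only a minimal sketch, assuming a made-up machine with a single accumulator register and four hypothetical instructions (`LOAD`, `ADD`, `STORE`, `HALT`); real processors are far more complex, and none of these names come from an actual instruction set.

```python
def run(program, memory):
    """Toy fetch-decode-execute loop for a hypothetical one-register machine."""
    acc = 0  # accumulator: the single working register of this toy CPU
    pc = 0   # program counter: index of the next instruction to fetch

    while pc < len(program):
        op, arg = program[pc]  # fetch the next instruction
        pc += 1

        # decode and execute
        if op == "LOAD":       # copy a value from memory into the accumulator
            acc = memory[arg]
        elif op == "ADD":      # add a value from memory to the accumulator
            acc += memory[arg]
        elif op == "STORE":    # write the accumulator back to memory
            memory[arg] = acc
        elif op == "HALT":     # stop execution
            break
        else:
            raise ValueError(f"unknown instruction: {op}")

    return memory


if __name__ == "__main__":
    memory = [2, 3, 0]  # two inputs and one free cell for the result
    program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]
    print(run(program, memory))
```

In this sketch the list `memory` plays the role of RAM, while the loop in `run` stands in for the CPU repeatedly fetching and executing instructions.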

III. Operating Systems:

- Windows: Developed by Microsoft, Windows is one of the most widely used operating systems for PCs, known for its user-friendly interface and compatibility with a wide range of software.

- macOS: Developed by Apple, macOS is the operating system used in Apple’s Mac computers, known for its sleek design and seamless integration with other Apple devices.

- Linux: An open-source operating system popular among developers and tech enthusiasts for its flexibility, security, and vast community support.

- Unix: A family of multitasking, multi-user operating systems used primarily in servers and workstations, known for its stability and robustness.

IV. Computer Networks:

- Local Area Network (LAN): A network that connects devices within a limited geographical area, such as a home, office, or school.

- Wide Area Network (WAN): A network that spans a large geographical area, typically connecting multiple LANs or other networks across long distances.

- Internet: A global network of networks that interconnects computers and devices, enabling communication and information exchange worldwide.

- Wireless Networks: Networks that utilize wireless technology, such as Wi-Fi and Bluetooth, for data transmission between devices without the need for physical cables.

V. Security and Privacy:

- Antivirus Software: Programs designed to detect, prevent, and remove malicious software (malware) such as viruses, worms, and trojans.

- Firewalls: Security mechanisms that monitor and control incoming and outgoing network traffic, protecting against unauthorized access and cyberattacks.

- Encryption: The process of encoding data to prevent unauthorized access, ensuring confidentiality and privacy, commonly used in communication, storage, and online transactions.
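The idea behind encryption can be shown with one of the simplest schemes, the one-time pad, in which each byte of the message is combined (XOR) with a byte of a random key of the same length. This is only an illustrative sketch: the function names are hypothetical, and real systems rely on vetted algorithms and libraries rather than hand-written code like this.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each byte of data with the matching byte of key."""
    if len(data) != len(key):
        raise ValueError("key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


def encrypt(plaintext: bytes):
    """Return (ciphertext, key), using a fresh random key as long as the message."""
    key = secrets.token_bytes(len(plaintext))  # cryptographically strong random bytes
    return xor_bytes(plaintext, key), key


def decrypt(ciphertext: bytes, key: bytes) -> bytes:
    """XOR with the same key again to recover the original message."""
    return xor_bytes(ciphertext, key)


if __name__ == "__main__":
    ciphertext, key = encrypt(b"meet at noon")
    print(decrypt(ciphertext, key))
```

Without the key, the ciphertext reveals nothing about the message beyond its length. The key must be kept secret and never reused, which is why this scheme is rarely practical outside of teaching examples.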

VI. Ethical and Social Implications:

- Digital Divide: Disparities in access to and proficiency with technology, resulting in unequal opportunities and outcomes for individuals and communities.

- Privacy Concerns: Issues surrounding the collection, storage, and use of personal data by corporations and governments, raising questions about surveillance, consent, and data protection.

- Cybersecurity Threats: Risks posed by cyberattacks, data breaches, identity theft, and other malicious activities, highlighting the importance of robust security measures and awareness.

- Automation and Job Displacement: The impact of automation and artificial intelligence on employment, as machines increasingly perform tasks traditionally done by humans, leading to job displacement and economic disruption.

VII. Emerging Technologies:

- Internet of Things (IoT): The interconnectedness of everyday objects via the internet, enabling smart devices, home automation, and data-driven insights.

- Augmented Reality (AR) and Virtual Reality (VR): Immersive technologies that blend digital content with the real world (AR) or create entirely virtual environments (VR), revolutionizing entertainment, education, and various industries.

- Blockchain: A decentralized, secure ledger technology underlying cryptocurrencies like Bitcoin, with potential applications in finance, supply chain management, and digital identity verification.

- Quantum Computing: A computing paradigm that leverages the principles of quantum mechanics to perform certain kinds of calculations far faster than classical computers can, promising breakthroughs in cryptography, optimization, and scientific research.
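The "secure ledger" idea behind blockchain, mentioned in the list above, rests on linking records together with cryptographic hashes. The sketch below is a simplified illustration using SHA-256 from Python's standard library; the function names and block layout are assumptions made for this example, and it deliberately leaves out the decentralized network and consensus mechanisms that real blockchains such as Bitcoin depend on.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, data):
    """Append a new block that points back at the hash of the last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    chain.append(
        {
            "index": index,
            "data": data,
            "prev_hash": prev_hash,
            "hash": block_hash(index, data, prev_hash),
        }
    )


def is_valid(chain):
    """Check that every block's hash matches its contents and its predecessor."""
    prev_hash = GENESIS_PREV_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True
```

Because each block's hash depends on the previous block's hash, changing any earlier record makes `is_valid` fail unless every later block is recomputed as well. That property is what makes tampering easy to detect.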

VIII. Environmental Impact:

- Energy Consumption: The significant energy requirements of computing infrastructure, including data centers, contribute to carbon emissions and environmental degradation.

- E-Waste: The disposal of electronic waste, including obsolete or discarded computers and peripherals, poses environmental hazards due to toxic components and improper recycling practices.

- Green Computing: Initiatives aimed at reducing the environmental footprint of computing technologies through energy-efficient hardware, sustainable practices, and responsible disposal and recycling.

IX. Future Trends and Challenges:

- Quantum Supremacy: Demonstrating quantum computers that surpass classical computers in specific tasks, a step toward practical quantum computing that could open new frontiers in computation, cryptography, and material science.

- AI Ethics and Governance: Addressing ethical concerns surrounding the development and deployment of artificial intelligence, including bias, accountability, and transparency, to ensure responsible AI governance.

- Cybersecurity Resilience: Strengthening defenses against evolving cyber threats through advanced detection methods, secure-by-design practices, and international cooperation to combat cybercrime and safeguard digital infrastructure.

- Digital Inclusion: Bridging the digital divide by expanding access to technology, promoting digital literacy, and fostering equitable participation in the digital economy, ensuring that no one is left behind in the digital age.

In summary, computers have become indispensable tools shaping every aspect of our lives, from communication and commerce to education and entertainment. Understanding the various components, technologies, and societal implications of computing is crucial for navigating the complexities of the digital world and harnessing the transformative power of technology for the benefit of humanity.